What is Facebook DeepFace?

Facebook has cooked up facial verification software that will match faces almost to the level humans can. Aside from the obvious tagging implications, it'll be interesting to see what Facebook does with its artificial intelligence tools.

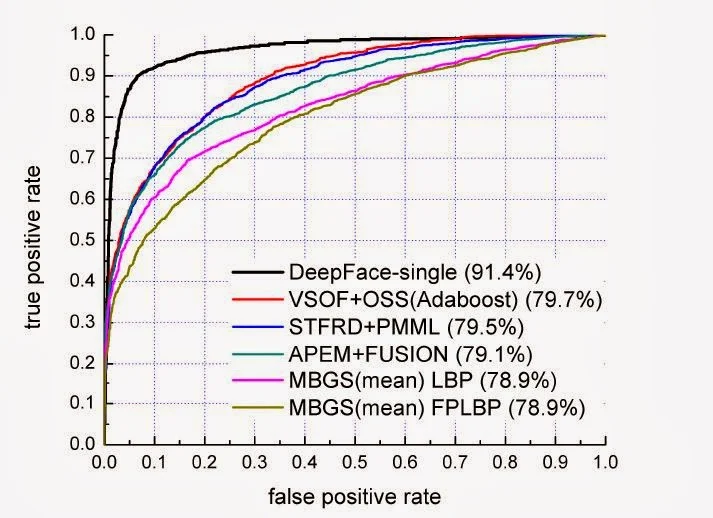

The software, highlighted in MIT Technology Review, is also outlined in a research paper by three researchers at Facebook's artificial intelligence group and one from Tel Aviv University. The punch line is that Facebook can now recognize faces with 97.25 percent accuracy using a so-called deep learning system, which uses networks of simulated neurons to spot patterns in data.

According to Facebook's research paper abstract:

"In modern face recognition, the conventional pipeline consists of four stages: detect → align → represent → classify. We revisit both the alignment step and the representation step by employing explicit 3D face modeling in order to apply a piecewise affine transformation, and derive a face representation from a nine-layer deep neural network. This deep network involves more than 120 million parameters using several locally connected layers without weight sharing, rather than the standard convolutional layers. Thus we trained it on the largest facial dataset to-date, an identity labeled dataset of four million facial images belonging to more than 4,000 identities, where each identity has an average of over a thousand samples. The learned representations coupling the accurate model-based alignment with the large facial database generalize remarkably well to faces in unconstrained environments, even with a simple classifier. Our method reaches an accuracy of 97.25% on the Labeled Faces in the Wild (LFW) dataset, reducing the error of the current state of the art by more than 25%, closely approaching human-level performance."
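The "more than 25%" figure in the abstract is a relative reduction in error rate, not a gain of 25 accuracy points. A quick back-of-the-envelope check in Python makes the distinction concrete. The function name is made up for illustration, and the prior error rate it prints is only what the two quoted numbers imply, not a figure taken from the paper.

```python
def implied_prior_error(new_accuracy_pct, relative_reduction):
    """Error rate (in %) the previous best method must have had,
    given the new accuracy and the relative error reduction."""
    new_error = 100.0 - new_accuracy_pct
    return new_error / (1.0 - relative_reduction)

# 97.25% accuracy means 2.75% of LFW face pairs are still misjudged.
new_error = 100.0 - 97.25

# A reduction of exactly 25% would put the earlier error at about 3.67%.
# Because the abstract says "more than 25%", that value is a lower bound.
prior_error = implied_prior_error(97.25, 0.25)

print(f"new error: {new_error:.2f}%")
print(f"prior error implied by a 25% reduction: {prior_error:.2f}%")
```

In other words, the gap being closed is about one percentage point of accuracy, which is large for a benchmark where the remaining error is already under 4%.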

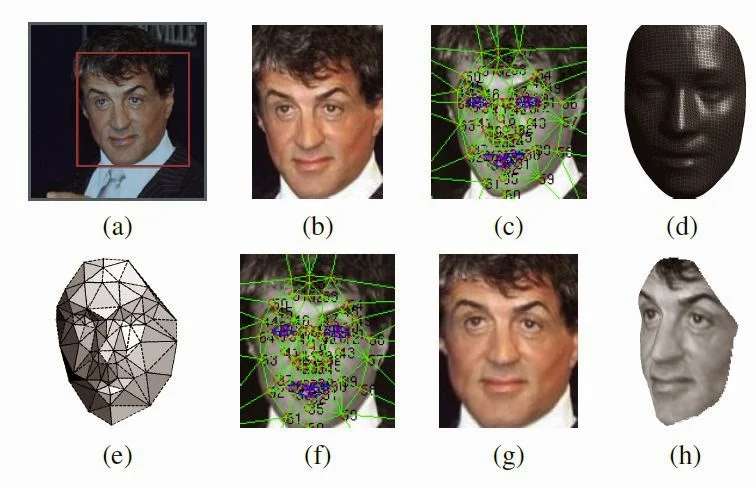

DeepFace alignment pipeline.

(a) The detected face, with 6 initial fiducial points.

(b) The induced 2D-aligned crop.

(c) 67 fiducial points on the 2D-aligned crop with their corresponding Delaunay triangulation; triangles were added on the contour to avoid discontinuities.

(d) The reference 3D shape transformed into the 2D-aligned crop image-plane.

(e) Triangle visibility w.r.t. the fitted 3D-2D camera; black triangles are less visible.

(f) The 67 fiducial points induced by the 3D model that are used to direct the piecewise affine warping.

(g) The final frontalized crop.

(h) A new view generated by the 3D model (not used in this paper).
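To give a feel for what "piecewise affine" means in steps (c) through (g), here is a minimal Python sketch, not Facebook's implementation. It assumes the usual reading of the term: each triangle of the triangulation gets its own affine map, fixed by where its three corners should land. The function names and the example coordinates are invented for illustration.

```python
def solve_row(src_tri, values):
    """Find (a, b, c) with a*x + b*y + c == value at each source vertex."""
    (x0, y0), (x1, y1), (x2, y2) = src_tri
    v0, v1, v2 = values
    det = (x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0)
    if det == 0:
        raise ValueError("source triangle is degenerate")
    a = ((v1 - v0) * (y2 - y0) - (v2 - v0) * (y1 - y0)) / det
    b = ((x1 - x0) * (v2 - v0) - (x2 - x0) * (v1 - v0)) / det
    c = v0 - a * x0 - b * y0
    return a, b, c

def triangle_affine(src_tri, dst_tri):
    """Affine map (two rows of three coefficients) sending src_tri onto dst_tri."""
    row_x = solve_row(src_tri, [p[0] for p in dst_tri])
    row_y = solve_row(src_tri, [p[1] for p in dst_tri])
    return row_x, row_y

def apply_affine(affine, point):
    """Map one (x, y) point through the affine transform."""
    (a, b, c), (d, e, f) = affine
    x, y = point
    return (a * x + b * y + c, d * x + e * y + f)

# Hypothetical triangle of fiducial points before and after alignment.
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
dst = [(2.0, 3.0), (4.0, 3.0), (2.0, 6.0)]
warp = triangle_affine(src, dst)

# Any pixel inside the source triangle follows the same map.
moved = apply_affine(warp, (0.5, 0.5))
```

A full warp would repeat this for every triangle in the mesh and resample the pixels inside each one, which is why the extra contour triangles in (c) matter: they keep neighbouring maps from tearing the image at the edges.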

Outline of the DeepFace architecture.

- A front-end of a single convolution-pooling-convolution filtering on the rectified input, followed by three locally-connected layers and two fully-connected layers.

- Colors illustrate outputs for each layer.

- The net includes more than 120 million parameters, where more than 95% come from the local and fully connected layers.
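Why do the local and fully connected layers hold most of the parameters? A convolutional layer reuses one set of filters at every image position, while a locally connected layer learns a separate set for each position. The Python sketch below counts weights and biases for both cases. The layer sizes are illustrative assumptions chosen for round numbers, not the dimensions used in the paper.

```python
def conv_params(kernel, c_in, c_out):
    """Weights plus one bias per filter, shared across all positions."""
    return kernel * kernel * c_in * c_out + c_out

def local_params(kernel, c_in, c_out, out_h, out_w):
    """Same filter shape, but an independent copy at every output position."""
    return out_h * out_w * (kernel * kernel * c_in * c_out + c_out)

# Assumed sizes for illustration only: 5x5 filters, 8 channels in and out,
# producing a 20x20 output map.
shared = conv_params(5, 8, 8)
unshared = local_params(5, 8, 8, 20, 20)

print(f"convolutional layer:     {shared:,} parameters")
print(f"locally connected layer: {unshared:,} parameters")
print(f"ratio: {unshared // shared}x")
```

The ratio is simply the number of output positions, so even a modest feature map multiplies the parameter count by hundreds. That is the trade the abstract describes: giving up weight sharing costs parameters, which in turn calls for a very large training set.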

Computational efficiency

The research team implemented an efficient CPU-based feedforward operator, which exploits both the CPU's Single Instruction Multiple Data (SIMD) instructions and its cache by leveraging the locality of floating-point computations across the kernels and the image. Using a single-core Intel 2.2GHz CPU, the operator takes 0.18 seconds to run from the raw pixels to the final representation. Efficient warping techniques were implemented for alignment; alignment alone takes about 0.05 seconds. Overall, DeepFace runs at 0.33 seconds per image, accounting for image decoding, face detection and alignment, the feedforward network, and the final classification output.
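The timings quoted above can be broken into a rough per-image budget. The short Python snippet below does the subtraction in whole milliseconds to avoid floating-point noise. Since the alignment figure is given as approximate, the remainder should be read as a ballpark, and the variable names are ours rather than the paper's.

```python
# Figures from the paragraph above, converted to milliseconds.
total_ms = 330        # full pipeline, per image
feedforward_ms = 180  # raw pixels to final representation
alignment_ms = 50     # approximate, alignment alone

# Whatever is left covers image decoding, face detection,
# and the final classification step.
other_ms = total_ms - feedforward_ms - alignment_ms

# Throughput of one core, assuming images are processed back to back.
images_per_second = 1000 / total_ms

print(f"decoding, detection and classification: about {other_ms} ms")
print(f"throughput: about {images_per_second:.1f} images per second per core")
```

So the neural network itself accounts for a little over half of the time per image, and a single core handles roughly three images per second.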

So far, this project is being put forward mostly as an academic pursuit, in a research paper released last week, and the research team behind it will present its findings at the Computer Vision and Pattern Recognition conference in Columbus, Ohio in June. Still, it has tremendous potential for future application, both for Facebook itself and in terms of its ramifications for the field of study as a whole.

Sources: Facebook
